Safe, Untrusted Agents for Big Data Infrastructures

Introduction

Our new research paper, Secure and Safe AI Agents for Big Data Infrastructures, has been accepted to S2AI@BigData 2025. It will appear as an IEEE-affiliated paper and will be presented in Macau on December 8 to 11, 2025. The preprint is available on arXiv, and the underlying prototype is open source.

- Paper: https://arxiv.org/abs/2510.09567

- Workshop: https://s2ai-bigdata.github.io/

- Code: https://github.com/BauplanLabs/the-agentic-lakehouse

Rethinking automation in the Lakehouse

By 2025, the lakehouse has become the standard architecture for analytics and AI. It offers clear separation of storage and compute, consistent table semantics on object storage, and first-class support for Python and SQL.

As developers spend more time working with AI agents in their IDEs, a natural question follows: if LLMs are getting better at reasoning and tool use, can agents manage parts of the data lifecycle inside a cloud lakehouse?

This is not simple. Lakehouses coordinate sensitive production data across many teams and components (storage, compute, orchestration, schemas, and user code). These systems are built for long-lived human workflows, not for one-off automated actions. Identifying safe tasks for agents is difficult because the surface area is large and the stakes with data are typically high.

We think data pipeline management is a strong candidate. Data pipelines are complex, stateful objects that fail often, and usually for reasons that are hard to diagnose. At the same time, they are structured enough that an agent could in principle operate within clear boundaries.

In the paper, we show how a lakehouse can operate as a fully programmable environment where pipelines are defined and executed as code. The key abstractions isolate execution, control I/O, and enforce transactional behavior, which creates the trust boundaries needed for safe automation.

Finally, we show the idea in practice. Using Bauplan, we open sourced a prototype where an agent investigates a failing pipeline, applies a fix, and proposes it for merge only after passing a deterministic verifier. The point is to demonstrate that safe agentic behavior is possible today with the primitives already present in a modern lakehouse.

Trusting your AI systems

"Love all, trust few." (William Shakespeare)

A modern data lakehouse is a system where storage, compute, schemas, orchestration, and user-written code all interact at the same time. These interactions create two risks that show up constantly in real-world data engineering.

The first risk is unsafe execution. Pipelines mix code with different dependencies and assumptions, so a single job can affect others through drift or uncontrolled I/O. If an agent is allowed to create or modify tables, the risk grows. Without strict data and runtime isolation, an agent can disrupt the assets that downstream systems depend on.

This is why strong isolation of both data and runtime is a prerequisite for letting AI systems manipulate data. Unless each transformation runs in a clean, reproducible environment that cannot interfere with others, we cannot guarantee that those systems will do no harm.

The second risk is inconsistent data. Pipelines read from production and write back into production. When a run partially completes, fails in the middle, or applies incompatible changes, downstream systems end up seeing a mix of new and old tables. This creates silent corruption, cascading failures, and dashboards that tell conflicting stories depending on which tables updated first.

Transactionality is the mechanism that prevents this. A pipeline needs to function as a single atomic unit: either everything completes successfully and becomes visible, or nothing does.

These two properties, isolation and transactionality, determine whether AI automation is even possible on a data platform.

The Lakehouse as a programmable execution environment

Bauplan’s philosophy is that the lakehouse is not just a query system: it is a programmable execution environment. The idea is to expose the entire data lifecycle through code: pipelines, queries, environments, permissions, and runtime inspection all live behind simple APIs. This creates a single interface that humans, cloud systems, and agents can use without dealing with hidden and ad hoc infrastructure.

Declare your pipelines

A pipeline is a directed graph of transformations that start from raw tables and produce new data assets. In Bauplan, each transformation is a standard Python function. The function declares its inputs, its environment, and the table it generates. The platform handles isolation, dependency resolution, and the physical I/O. Because environments are declared explicitly, two functions can run with different Python versions or dependency sets without interfering with one another. Code becomes the definition of both logic and infrastructure, and the surface area stays small and predictable.
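To make the declarative idea concrete, here is a minimal sketch in plain Python. It is not the Bauplan API: the `model` decorator, `ModelSpec`, `REGISTRY`, and the table names are hypothetical, chosen only to show how a function can declare its inputs, its environment, and its output table, and how a platform could derive the execution order from those declarations.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical names for illustration only; this is NOT the Bauplan API.
@dataclass(frozen=True)
class ModelSpec:
    output_table: str
    inputs: List[str]
    python_version: str
    pip: Dict[str, str]
    fn: Callable

REGISTRY: Dict[str, ModelSpec] = {}

def model(output_table, inputs, python_version, pip=None):
    """Register a function together with its declared inputs and environment."""
    def decorator(fn):
        REGISTRY[output_table] = ModelSpec(
            output_table, list(inputs), python_version, dict(pip or {}), fn
        )
        return fn
    return decorator

@model("clean_trips", inputs=["raw_trips"], python_version="3.11",
       pip={"pandas": "2.2.2"})
def clean_trips(raw_trips):
    return [row for row in raw_trips if row["miles"] > 0]

# A second function with a different Python version and dependency set.
@model("trip_stats", inputs=["clean_trips"], python_version="3.10",
       pip={"numpy": "1.26.4"})
def trip_stats(clean_trips):
    return [{"n_trips": len(clean_trips)}]

def execution_order(registry):
    """Order models so that each one runs after the models it reads from."""
    order, seen = [], set()

    def visit(table):
        if table in seen or table not in registry:
            return  # raw tables are not produced by any model
        seen.add(table)
        for dep in registry[table].inputs:
            visit(dep)
        order.append(table)

    for table in registry:
        visit(table)
    return order

print(execution_order(REGISTRY))
```

In this sketch the environment is only recorded, not enforced. The point is that everything a runtime would need to build an isolated environment per function is already present in the code.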

Execution follows the same model. Running a pipeline does not require Docker, Terraform, or cluster provisioning. But pipelines must still satisfy a difficult requirement: they need to manipulate production data without ever putting production at risk. Without safeguards, a failed run can leave partially written tables, inconsistent states, or outputs that briefly exist before being rolled back by hand.

Git for data as a ledger

To solve this, we treat every pipeline run as a controlled state transition, not as a set of ad hoc writes. Before a run starts, the platform creates a dedicated copy-on-write branch derived from the current state of production. The run reads production data but writes only to its own branch. If the run completes successfully, its changes can be merged into main in a single atomic operation. If anything fails, nothing is merged. Production remains untouched.
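The run lifecycle described above can be sketched with a toy in-memory catalog. This is an illustration under simplifying assumptions, not how Bauplan is implemented: `Catalog`, `run_pipeline`, and their methods are hypothetical names, tables are plain tuples, and conflicts between concurrent merges are ignored.

```python
class Catalog:
    """Toy in-memory catalog. Table snapshots are immutable tuples."""

    def __init__(self, main_tables):
        self.main = dict(main_tables)
        self.branches = {}  # name -> {"base": snapshot of main, "delta": writes}

    def create_branch(self, name):
        # Copy-on-write: the branch shares main's snapshot and records
        # only its own writes. No table data is copied.
        self.branches[name] = {"base": self.main, "delta": {}}

    def read(self, name, table):
        branch = self.branches[name]
        return branch["delta"].get(table, branch["base"].get(table))

    def write(self, name, table, rows):
        self.branches[name]["delta"][table] = tuple(rows)

    def merge(self, name):
        # Build the new state first, then swap it in with one assignment,
        # so main exposes either all of the run's tables or none of them.
        delta = self.branches.pop(name)["delta"]
        self.main = {**self.main, **delta}

    def discard(self, name):
        self.branches.pop(name, None)


def run_pipeline(catalog, branch, steps):
    """Run (output_table, fn) steps on a branch; merge only if all succeed."""
    catalog.create_branch(branch)
    try:
        for table, fn in steps:
            rows = fn(lambda t: catalog.read(branch, t))
            catalog.write(branch, table, rows)
    except Exception:
        catalog.discard(branch)  # nothing is merged, main is untouched
        return False
    catalog.merge(branch)
    return True
```

A failing step in the middle of a run leaves `catalog.main` exactly as it was, even if earlier steps had already written tables to the branch.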

The benefits of the branch-and-merge model follow directly. It gives deterministic reproducibility because each run is tied to a specific commit and code snapshot. It gives isolation because failed runs never contaminate production. It gives reversibility because every state change is represented as a commit that can be rolled back. In practical terms, it applies familiar software engineering principles to the lifecycle of data.
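Reproducibility and reversibility can be illustrated with an equally small sketch of a commit ledger. The `Ledger` and `Commit` classes are hypothetical and use only the standard library. Each commit records its parent, the table snapshots, and a hash of the code that produced them, and a rollback simply moves the head pointer back.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class Commit:
    commit_id: str
    parent: Optional[str]
    tables: Dict[str, tuple]  # table name -> immutable snapshot
    code_hash: str            # ties the data state to a code snapshot


class Ledger:
    """Hypothetical append-only ledger of data states."""

    def __init__(self):
        self.commits: Dict[str, Commit] = {}
        self.head: Optional[str] = None

    def commit(self, tables, code):
        code_hash = hashlib.sha256(code.encode()).hexdigest()
        payload = json.dumps(
            {
                "parent": self.head,
                "tables": {k: list(v) for k, v in tables.items()},
                "code": code_hash,
            },
            sort_keys=True,
        )
        # The id is derived from the content, so the same inputs
        # always produce the same commit id.
        commit_id = hashlib.sha256(payload.encode()).hexdigest()[:12]
        self.commits[commit_id] = Commit(commit_id, self.head, dict(tables), code_hash)
        self.head = commit_id
        return commit_id

    def rollback(self, commit_id):
        """Move head back to an earlier commit; stored commits are kept."""
        if commit_id not in self.commits:
            raise KeyError(commit_id)
        self.head = commit_id

    def tables(self):
        return self.commits[self.head].tables if self.head else {}
```

Because commits are never deleted in this sketch, a bad change can be undone by pointing head at the previous commit, and the rejected state remains available for inspection.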

These properties also make the lakehouse naturally agent-friendly. Agents need to inspect runs, read logs, query tables, create branches, and propose changes. In a programmable lakehouse, all of these capabilities already exist as typed and documented APIs. Exposing them to agents is simply a matter of routing the same methods through an MCP server. No special privileges or hidden channels are required. The agent interacts with the system exactly as a human developer would.

This stands in contrast to traditional stacks, where developers must move between Spark clusters, notebooks, SQL consoles, warehouses, and scripts. That fragmentation is a challenge for humans and impossible for agents to navigate safely. A programmable lakehouse removes that fragmentation. It provides one predictable interface grounded in code, which is why programmability is the foundation for safe automation.

Can an agent repair a broken pipeline?

Once we have isolation and transactionality in place, the natural question becomes whether an agent can operate inside these boundaries and repair a real pipeline failure. To explore this, we built a small prototype that exercises the exact workflow a human engineer would follow.

We start by introducing a controlled failure. In our experiment, the error comes from a dependency mismatch around the release of NumPy 2.0, which broke many containers that used pandas 2.0. This kind of problem is common in real systems, painfully time-consuming to debug, and a perfect scenario for testing agentic behavior.

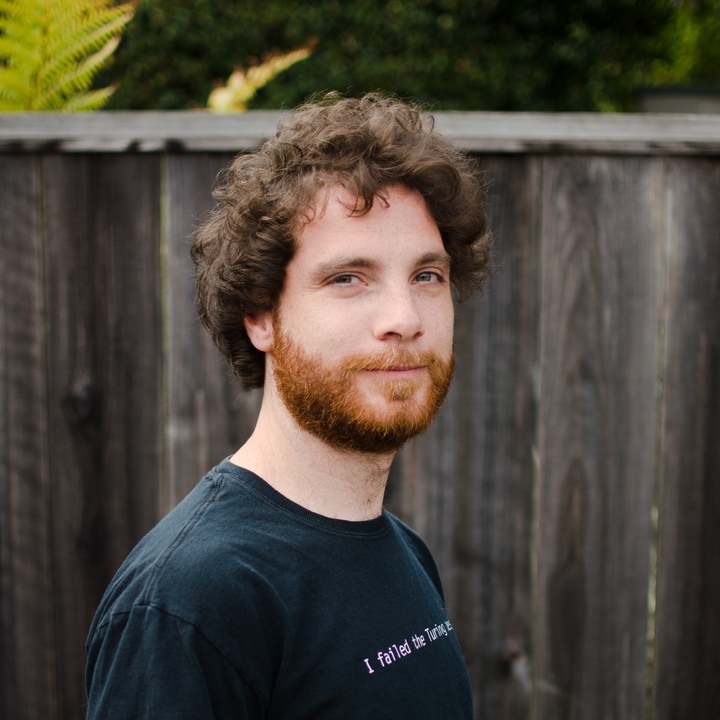

The prototype uses Bauplan as the programmable lakehouse, with all of its APIs exposed to the agent through an MCP server. The agent runs on top of smolagents, a lightweight ReAct framework that handles the reasoning steps, tool calls, and logs inside Python. LLM inference is provided through a simple LiteLLM interface, which makes it easy to switch between models from OpenAI, Anthropic, and TogetherAI.

With this setup, the agent behaves like an engineer would. It inspects the failing run, retrieves the logs, checks the types and schemas of upstream tables, creates a debug branch derived from production, applies a fix to the environment declaration, runs the pipeline again, and waits for the output. Before anything can reach production, a verifier function inspects the resulting branch and decides whether the tables satisfy the expected conditions. Only if the verifier returns true does the change become eligible for merge.
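The control flow of that loop can be sketched as follows. This is a simplified stand-in, not the prototype's code: `run_on_branch` fakes a pipeline run on a debug branch, `pin_numpy` replaces the LLM with a hard-coded fix, and the conditions checked by `verify` are invented for the example. All names and version pins are assumptions.

```python
from typing import Callable, Dict, List, Optional

Env = Dict[str, str]


def verify(tables: Dict[str, List[dict]]) -> bool:
    """Deterministic check on the branch output (hypothetical conditions)."""
    rows = tables.get("trip_stats", [])
    return len(rows) > 0 and all(r.get("n_trips", 0) > 0 for r in rows)


def run_on_branch(env: Env) -> Dict[str, List[dict]]:
    """Stand-in for a pipeline run on a debug branch."""
    if env.get("numpy", "").startswith("2."):
        raise ImportError("numpy 2.x is incompatible with the pinned pandas build")
    return {"trip_stats": [{"n_trips": 42}]}


def repair(env: Env, propose_fix: Callable[[Env, str], Env],
           max_attempts: int = 3) -> Optional[Env]:
    """Return an environment whose run passes the verifier, or None."""
    candidate = dict(env)
    for _ in range(max_attempts):
        try:
            tables = run_on_branch(candidate)
        except Exception as err:
            # Feed the error back to the agent and try its next proposal.
            candidate = propose_fix(candidate, str(err))
            continue
        if verify(tables):
            return candidate  # only now is the change eligible for merge
        candidate = propose_fix(candidate, "verifier rejected the output")
    return None


def pin_numpy(env: Env, error: str) -> Env:
    # Stand-in for the LLM: always proposes pinning NumPy below 2.0.
    return {**env, "numpy": "1.26.4"}


fixed = repair({"pandas": "2.0.0", "numpy": "2.0.0"}, pin_numpy)
print(fixed)
```

The agent's proposals are untrusted throughout. The only path to a merge goes through `verify`, which is ordinary deterministic code, and an agent that never finds a fix simply returns `None` without touching production.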

In practice, this small example hits every abstraction introduced earlier: declarative functions, isolated environments, copy-on-write branches, atomic merges, and a narrow API surface that both humans and agents can reason about. It also exposes something important about current LLMs. Different models vary widely in their ability to complete the repair. Some succeed quickly. Others struggle or fail. Yet none of these failures cause damage, because the lakehouse keeps their actions contained.

It is also clear from the prototype that having an MCP server is not enough. Many leading stacks, such as dbt running on Snowflake, provide tools and metadata but do not allow agentic repair. Without isolation and transactional semantics, an MCP server becomes a thin wrapper around a system that cannot guarantee safety. Programmability plus isolation, not MCP alone, is what makes the automation viable.

Finally, because model selection sits behind a simple configuration, it is easy to imagine workflows where different models are used for different stages depending on cost or time constraints. The branch-based concurrency control also makes it possible to run several agents in parallel without risking interference.
