Data Lakehouse

Unify your data for analytics, machine learning, and AI on a single scalable, open foundation. Without the complexity of a platform team.

Unify your data for analytics, machine learning, and AI on a single scalable, open foundation. Without the complexity of a platform team.

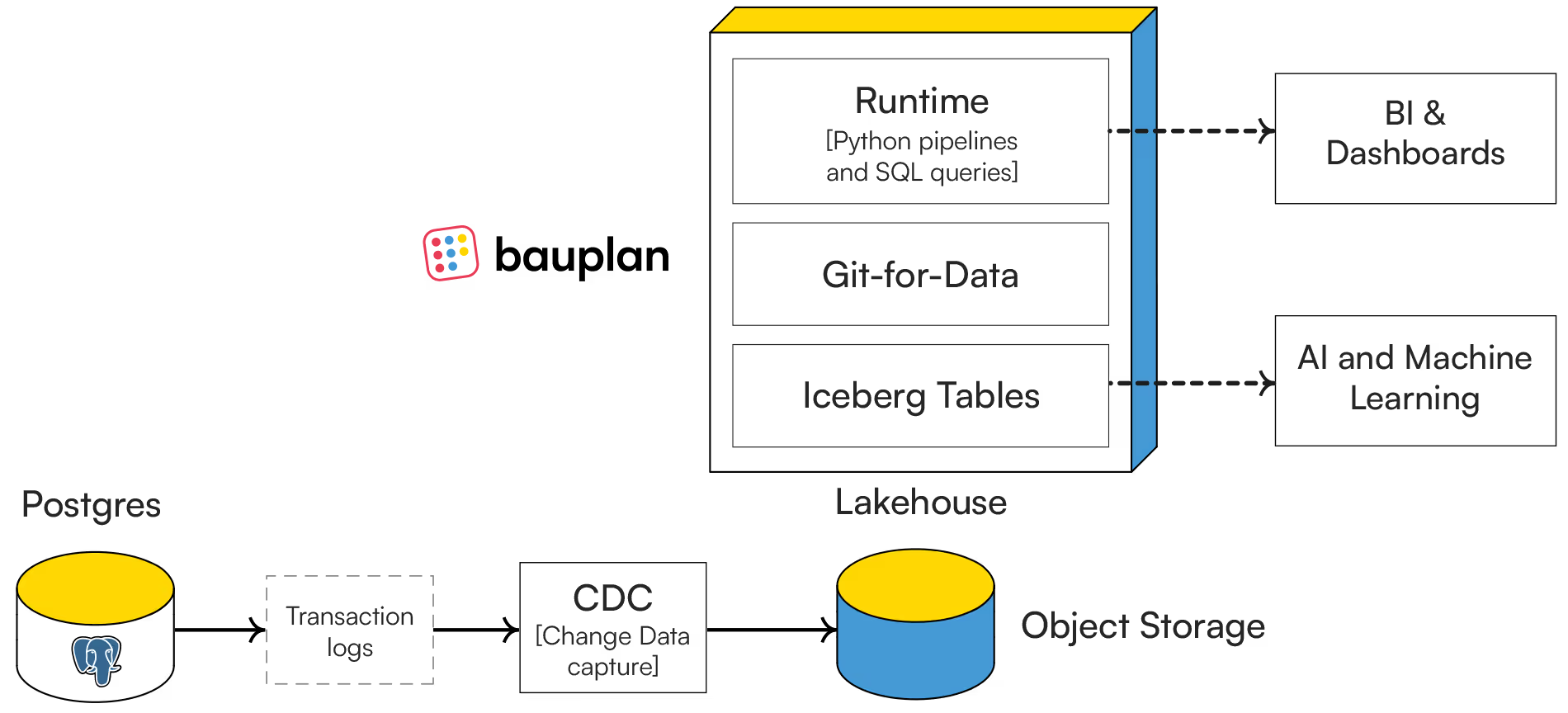

Raw files (Parquet, JSON, CSV) live directly in object storage.

Table formats like Apache Iceberg add transactions, schema evolution, and time travel.

Engines and frameworks stay separate from storage, so you can scale and adapt easily.

Consolidate scattered databases into one system for analytics and AI on open formats in object storage.

Start small and scale to larger data volumes as your needs evolve, without getting locked into a vendor.

Combine SQL for queries and joins with Python for custom logic, feature engineering, and agent-driven workflows.

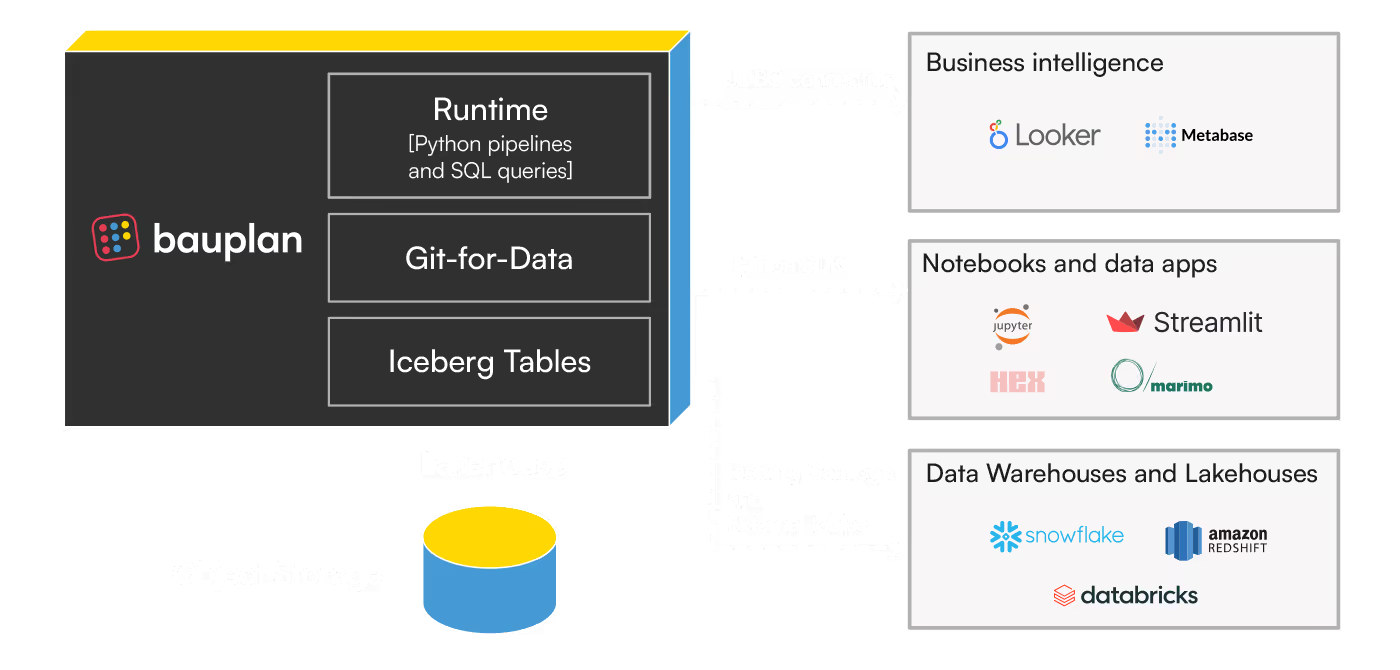

Bauplan unifies Iceberg tables, branching, and execution in one system. Spin up branches for medallion layers, run DAGs in isolation, and merge back seamlessly. No catalog wrangling, no custom glue code.

Use pure Python and SQL. No Spark, no JVM, no cluster overhead. Bauplan handles execution with Arrow under the hood—you focus purely on transformations and logic.

Bauplan brings Git-style workflows to your data. Branch, commit, and merge tables and pipelines with the same ergonomics developers already know. Every change is versioned, isolated, and reversible, so you can experiment safely and deploy with confidence.

No proprietary DSLs, no siloed UIs. Your Bauplan project is just a Python repo: data infrastructure is declared directly in code, reproducible, testable, and automation-friendly. No Dockerfiles, no Terraform script, no divergence between dev and prod.

Capture change data from your source systems directly into S3, GCS, or Azure Blob. Learn More

Ingest into Iceberg tables with Write-Audit-Publish semantics: isolate in a branch, run validations, then merge. Simple, robust, and safe.

Write Python functions and SQL queries. Use Polars, Pandas, or DuckDB for fast, expressive transformations. No Spark, no DSL, no docker, no Kubernetes.

Run both synchronous queries and asynchronous jobs on Bauplan’s runtime. Develop interactively, then scale up without changing your code.

Run pipelines in Bauplan, then expose curated Iceberg tables to Warehouses, Lakehouses and SQL engines, or connect tables directly to BI tools and query with Bauplan, or use our PySDK to work in your favorite notebook platform.