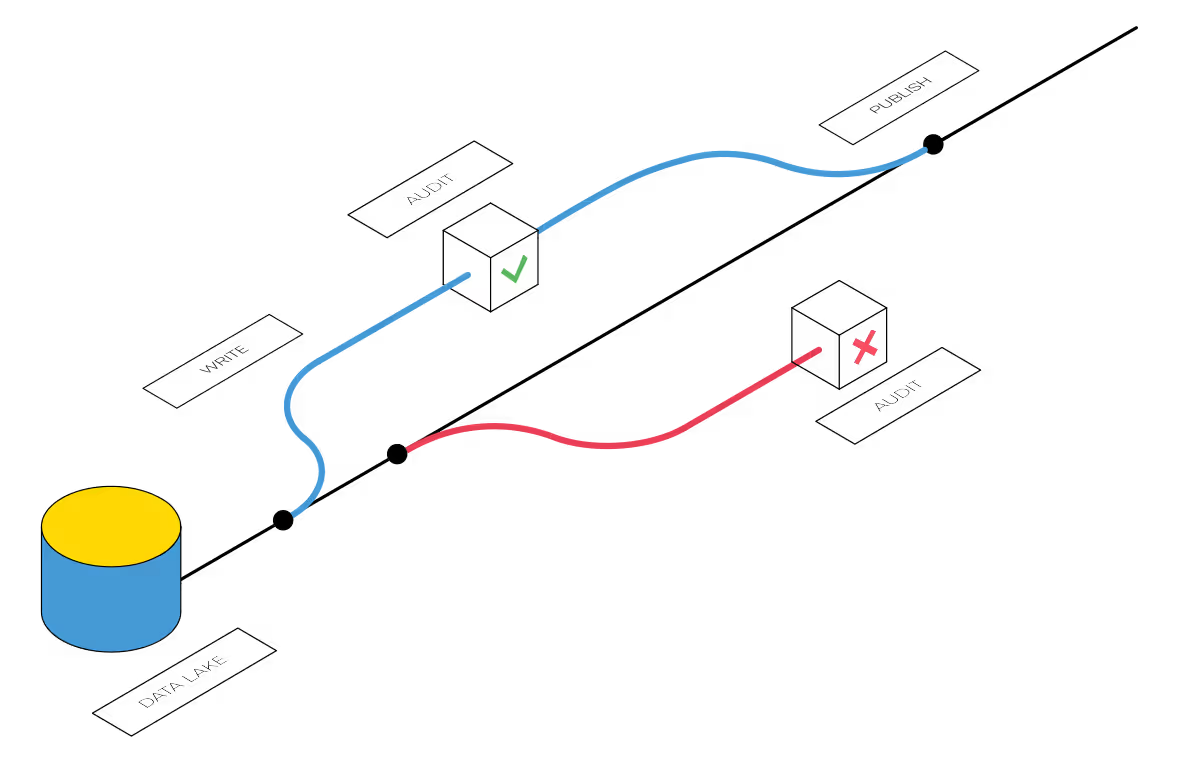

Write, Audit, Publish.

Ship Data Safely to Production. Every data change tested. Every deployment validated. Zero production incidents.

Ship Data Safely to Production. Every data change tested. Every deployment validated. Zero production incidents.

Changes are written to an isolated branch of your data lake, not in production

Automated tests are performed to validate data quality and business logic

Only the data that pass the tests is merged in the production data lake

A type mismatch, a missing column, or a bad Parquet file upstream can silently poison dashboards, models, and APIs. By the time anyone notices, it’s 2 AM, and the incident is already burning hours of triage while eroding trust with stakeholders.

Bauplan gives you WAP out of the box: one line to branch, one line to test, and one line to merge. Every change runs in isolation, gets audited automatically, and only lands in production when it’s proven safe.

Changes are tested in complete isolation. Bad data never reaches your dashboards, ML models, or downstream consumers. Your data SLAs stay intact.

No more 2 AM rollbacks or weekend firefighting. Developers work with familiar git-like workflows: branch, test, merge. What took months to implement now takes hours.

Define data quality tests and expectations using Bauplan's standard expectations library or writing your custom tests. Every merge automatically validates data quality, before reaching production.

Every change is versioned. Made a mistake? One command rolls back to any previous state. Everything in your data lakehouse has now an undo button.

Create unlimited test environments without storage costs.

Each branch is a full zero-copy of your data lake that uses no additional storage.

Run quality checks with Bauplan's standard expectations library, custom Python logic or SQL assertions. Bauplan checks the quality of your data at runtime, before creating the data asset so you don’t waste time and compute.

Merges are transactional and gated by expectations. Either all changes apply with validated quality, or none do. Production stays consistent even if merges fail.