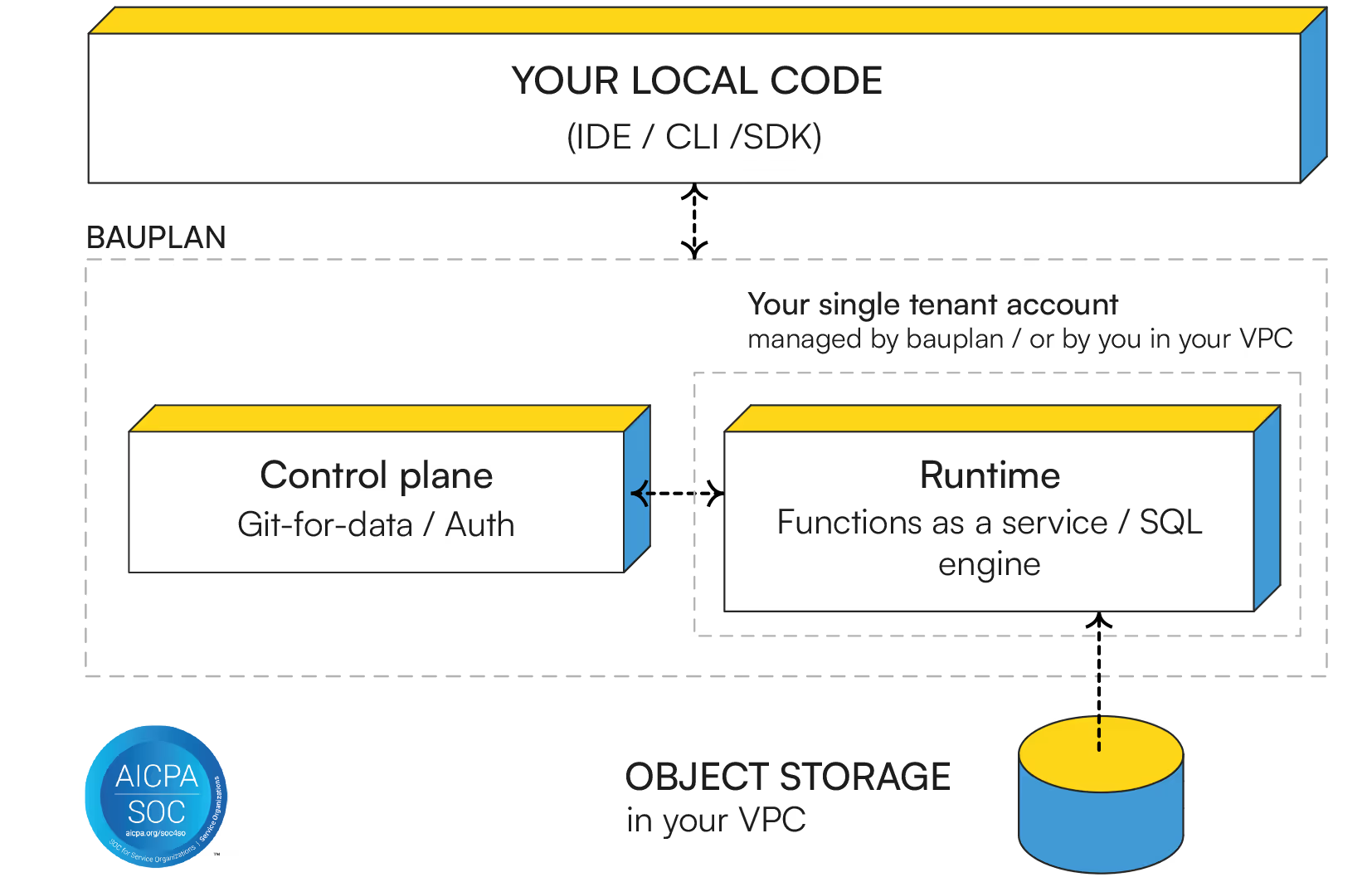

The lakehouse for AI-native data engineering

Build data pipelines from your repo with AI coding assistants. Bauplan turns data changes into branch-isolated runs and atomic publishes, all exposed as simple APIs that your IDE, CLI, and code reviews can reason about.